AMD’s stock jumped 23.71%, adding nearly seventy billion dollars in market value in a single day.

The catalyst? A computing partnership with OpenAI that most investors barely understood.

The deal gives OpenAI access to up to six gigawatts of AMD’s Instinct GPUs over the coming years. That number means little until you see the scale: across its deals, OpenAI has now committed to more than 20 gigawatts of computing capacity, roughly the output of 20 large nuclear reactors.

The financial mechanics reveal something more interesting than just another chip deal.

A New Model for Infrastructure Finance

AMD didn’t just sell chips to OpenAI. It handed them a warrant to purchase up to 160 million AMD shares at one cent per share.

The shares vest progressively as OpenAI scales deployment from one to six gigawatts. They also vest only as AMD’s stock price crosses performance thresholds, with the final tranche tied to a $600 share price.
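The two vesting conditions interact: a tranche unlocks only when deployment and share price both clear their bars. The sketch below models that under loudly stated assumptions. The 160 million shares, the one-cent exercise price, the 1 to 6 GW range and the $600 final threshold come from the deal as described above. The six equal tranches and the intermediate price thresholds are hypothetical placeholders, since the full schedule is not given here.

```python
from dataclasses import dataclass

STRIKE_PRICE = 0.01          # exercise price per share, per the deal terms
TOTAL_SHARES = 160_000_000   # maximum warrant size, per the deal terms


@dataclass(frozen=True)
class Tranche:
    shares: int            # shares unlocked by this tranche
    gw_required: float     # gigawatts deployed before the tranche can vest
    price_required: float  # AMD share price the tranche also requires


def build_schedule() -> list[Tranche]:
    """HYPOTHETICAL schedule: six roughly equal tranches, one per gigawatt.

    Only the 1-6 GW range and the $600 final price come from the article;
    the intermediate price thresholds are placeholders for illustration.
    """
    prices = [0.0, 200.0, 300.0, 400.0, 500.0, 600.0]  # hypothetical except the last
    base = TOTAL_SHARES // 6
    shares = [base] * 5 + [TOTAL_SHARES - base * 5]    # last tranche absorbs rounding
    return [Tranche(s, gw, p) for s, gw, p in zip(shares, range(1, 7), prices)]


def vested_shares(gw_deployed: float, share_price: float, schedule: list[Tranche]) -> int:
    """A tranche vests only when BOTH its deployment and price conditions hold."""
    return sum(
        t.shares
        for t in schedule
        if gw_deployed >= t.gw_required and share_price >= t.price_required
    )


def intrinsic_value(gw_deployed: float, share_price: float, schedule: list[Tranche]) -> float:
    """Paper value of vested shares: (price - strike) per share, floored at zero."""
    per_share = max(share_price - STRIKE_PRICE, 0.0)
    return vested_shares(gw_deployed, share_price, schedule) * per_share


if __name__ == "__main__":
    schedule = build_schedule()
    # Hypothetical (deployment, share price) scenarios, not forecasts.
    for gw, price in [(1, 170.0), (3, 350.0), (6, 600.0)]:
        shares = vested_shares(gw, price, schedule)
        value = intrinsic_value(gw, price, schedule)
        print(f"{gw} GW @ ${price:.0f}: {shares:,} shares vested, ~${value / 1e9:.1f}B")
```

Whatever the real intermediate thresholds are, the full warrant at $600 works out to 160 million × ($600 − $0.01), or roughly $96 billion of paper value, and full deployment alone unlocks nothing beyond the tranches whose price bars have also been met.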

Compute capacity has become a financial asset class. Infrastructure is being financed, securitized, and capitalized at ecosystem scale.

This performance-based equity structure turns a vendor relationship into a mutual bet on AI’s future. AMD wins if it can deliver the chips and OpenAI’s deployments turn into revenue. OpenAI wins if it can deploy at scale, if AMD’s technology performs, and if AMD’s stock climbs enough to make the warrant valuable.

Both companies are now financially intertwined in ways that go beyond traditional supplier agreements.

The Revenue Math Doesn’t Add Up

OpenAI generates roughly $12 billion in annual revenue. The computing commitments it has signed this year approach $1 trillion across AMD, Nvidia, Oracle, and CoreWeave.

That ratio should make investors pause.
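To make the ratio concrete, the sketch below divides the commitments by revenue and then asks how many years of cumulative revenue it would take to reach $1 trillion at a few growth rates. The growth rates are hypothetical, and the model generously assumes every dollar of revenue (not profit) goes toward the commitments, so it is a rough scale check rather than a cash-flow model.

```python
def commitment_ratio(commitments: float, annual_revenue: float) -> float:
    """How many years of current revenue the commitments represent."""
    return commitments / annual_revenue


def years_to_cover(commitments: float, annual_revenue: float, growth: float) -> int:
    """Years until cumulative revenue first reaches the commitments.

    Assumes revenue grows at a constant HYPOTHETICAL rate and that all revenue
    (not profit) goes toward the commitments: a deliberately generous
    simplification that ignores every other cost.
    """
    if annual_revenue <= 0 or growth < 0:
        raise ValueError("need positive revenue and non-negative growth")
    years, cumulative, revenue = 0, 0.0, annual_revenue
    while cumulative < commitments:
        cumulative += revenue        # add this year's revenue
        revenue *= 1 + growth        # grow revenue for next year
        years += 1
    return years


if __name__ == "__main__":
    revenue = 12e9        # roughly $12B annual revenue (from the article)
    commitments = 1e12    # nearly $1T in commitments (from the article)
    print(f"ratio: {commitment_ratio(commitments, revenue):.1f}x")
    for g in (0.0, 0.5, 1.0):   # hypothetical annual growth rates
        print(f"growth {g:.0%}: {years_to_cover(commitments, revenue, g)} years")
```

The commitments are about 83 times current annual revenue. Even if revenue doubled every year, cumulative revenue alone would need seven years to reach $1 trillion; at 50% growth it would need ten.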

Even if these are options rather than firm commitments, the execution risk is substantial. Building out 20 gigawatts of computing infrastructure requires not just capital but power, cooling, real estate, and operational expertise at unprecedented scale.

The Roi acquisition adds another data point. OpenAI bought an investment startup focused on portfolio tracking and personalized financial advice. The company will wind down by October 2025, with CEO Sujith Vishwajith joining OpenAI.

The move signals OpenAI’s bet on personalization and consumer applications as revenue drivers. Bringing in meaningful consumer revenue becomes more critical when you’re burning through billions on data centers.

Circular Economics and Systemic Risk

The infrastructure buildout creates a tightly wound circular economy. OpenAI commits hundreds of billions to projects while suppliers like Nvidia and AMD put capital or equity back into those same buildouts.

Critics have questioned whether this interdependency creates systemic risk. If any link in the chain weakens, the strain could propagate quickly.

AMD CEO Lisa Su told analysts that AI is on a ten-year growth path and that foundational compute is essential to realize it. Sam Altman clarified that the AMD partnership is incremental to OpenAI’s work with Nvidia, not a replacement.

The message: OpenAI is hedging rather than replacing its primary supplier.

What Investors Should Watch

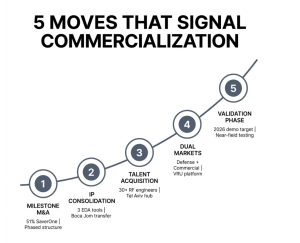

The AMD deal represents more than a chip purchase. It signals how AI infrastructure will be financed going forward: through performance-based equity, long-term capacity commitments, and deep vendor integration.

For AMD shareholders, the partnership validates the company’s position in AI compute and provides a path to tens of billions in revenue. The 23.71% stock jump reflects the market pricing in that potential.

For the broader market, the scale of OpenAI’s commitments raises questions about capital efficiency and execution risk. A company with $12 billion in annual revenue committing to nearly $1 trillion in infrastructure represents either visionary positioning or significant overreach.

The energy requirements alone are staggering. ChatGPT serves 2.5 billion queries daily across 700 million weekly users, and every query consumes energy. Scaling to hundreds of billions of daily prompts by 2030 would require the kind of infrastructure OpenAI is now securing.
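The link between query volume and power can be sketched with one line of arithmetic: daily queries times energy per query, divided by 24 hours, gives average power. The energy-per-query figure below is a hypothetical placeholder (published estimates vary widely), and the model counts serving only, ignoring training, cooling overhead, and idle capacity, so treat the output as order-of-magnitude.

```python
HOURS_PER_DAY = 24


def average_power_watts(queries_per_day: float, wh_per_query: float) -> float:
    """Average continuous power needed to serve a daily query volume.

    Watt-hours per day divided by 24 hours gives average watts. Counts serving
    only: training, cooling overhead, and idle capacity are ignored.
    """
    return queries_per_day * wh_per_query / HOURS_PER_DAY


if __name__ == "__main__":
    WH_PER_QUERY = 0.3   # HYPOTHETICAL energy per query; real figures vary widely
    print(f"average daily queries per weekly user: {2.5e9 / 700e6:.2f}")
    # 2.5B/day is from the article; 300B/day is a hypothetical stand-in
    # for "hundreds of billions" of daily prompts.
    for label, q in [("today (2.5B/day)", 2.5e9), ("hypothetical 300B/day", 300e9)]:
        mw = average_power_watts(q, WH_PER_QUERY) / 1e6
        print(f"{label}: ~{mw:,.0f} MW average")
```

Under this placeholder, today’s volume needs tens of megawatts of average power for serving alone, while hundreds of billions of daily prompts push the figure into gigawatts. The per-query assumption dominates the result, which is why the estimate is only a scale check.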

The Roi acquisition fits this context. Consumer applications with recurring revenue become essential when infrastructure costs reach this magnitude. Personalized financial tools, life management features, and AI assistants represent potential revenue streams beyond enterprise API access.

The Execution Question

The financial innovation is clear. The strategic vision is bold. The execution risk is real.

OpenAI has structured deals that give it optionality on massive computing capacity without immediate capital outlay. The performance-based warrant aligns AMD’s incentives with OpenAI’s deployment. The diversification across AMD, Nvidia, and others reduces single-vendor risk.

But the gap between $12 billion in revenue and nearly $1 trillion in commitments remains. The circular funding dynamics create interdependencies that could amplify stress if growth slows or if any major participant faces difficulties.

Investors analyzing AMD, Nvidia, or the broader AI infrastructure space should monitor how these commitments translate into actual deployments. The warrants vest on gigawatts actually deployed and on AMD’s share price, not on signed agreements.

The market has already added nearly seventy billion dollars to AMD’s valuation. Now comes the harder part: proving the infrastructure can be built, powered, and monetized at the scale these deals envision.

The numbers suggest we’re either witnessing the foundation of AI’s next decade or the early stages of infrastructure overbuilding. Which one depends entirely on execution.